Configure External Database Using Amazon RDS

This guide describes how to use Amazon RDS as the external database for your Kubeflow pipeline and metadata stores.

Amazon Relational Database Service (Amazon RDS)

Amazon RDS is a managed service that makes it easier to set up, operate, and scale a relational database in the AWS Cloud. It provides cost-efficient, resizable capacity for an industry-standard relational database and manages common database administration tasks. It supports several database engines, including MySQL, MariaDB, PostgreSQL, Oracle, and Microsoft SQL Server.

Deploy Amazon RDS MySQL in your environment

Before deploying a MySQL database with Amazon RDS, collect the configuration parameters you will need: VpcId, SubnetIds, and SecurityGroupId.

# Use these commands to find VpcId, SubnetId and SecurityGroupId if you deployed your EKS cluster using eksctl

# For DIY Kubernetes on AWS, modify tag name or values to get desired results

export AWS_CLUSTER_NAME=<YOUR EKS CLUSTER NAME>

# Below command will retrieve your VpcId

aws ec2 describe-vpcs --filters Name=tag:alpha.eksctl.io/cluster-name,Values=$AWS_CLUSTER_NAME | jq -r '.Vpcs[].VpcId'

# Below command will retrieve list of Private subnets

# You need to use at least two Subnets from your List

aws ec2 describe-subnets --filters "Name=tag:alpha.eksctl.io/cluster-name,Values=$AWS_CLUSTER_NAME" "Name=tag:aws:cloudformation:logical-id,Values=SubnetPrivate*" | jq -r '.Subnets[].SubnetId'

# Below command will retrieve SecurityGroupId for your Worker nodes

# This assumes all your Worker nodes share same SecurityGroups

INSTANCE_IDS=$(aws ec2 describe-instances --query 'Reservations[*].Instances[*].InstanceId' --filters "Name=tag-key,Values=eks:cluster-name" "Name=tag-value,Values=$AWS_CLUSTER_NAME" --output text)

for i in $INSTANCE_IDS  # INSTANCE_IDS is a plain string, so it is left unquoted to split into one ID per iteration

do

echo "SecurityGroup for EC2 instance $i ..."

aws ec2 describe-instances --instance-ids $i | jq -r '.Reservations[].Instances[].SecurityGroups[].GroupId'

done
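
The loop above prints the security groups once per instance, so the same group ID usually appears many times. The following is a minimal sketch, assuming bash, of two small helpers for tidying those results: one collapses a list of IDs to unique values, and one checks the two-subnet requirement. The function names `unique_ids` and `require_two_subnets` are illustrative and not part of any AWS tool.

```shell
#!/usr/bin/env bash

# Print each whitespace-separated ID read from stdin once, one per line, sorted.
unique_ids() {
  tr -s '[:space:]' '\n' | sed '/^$/d' | sort -u
}

# Succeed only when at least two subnet IDs are passed as arguments.
require_two_subnets() {
  if [ "$#" -lt 2 ]; then
    echo "need at least two subnets, got $#" >&2
    return 1
  fi
}

# Example usage (needs AWS credentials, so it is left commented out):
# aws ec2 describe-instances \
#   --filters "Name=tag-key,Values=eks:cluster-name" "Name=tag-value,Values=$AWS_CLUSTER_NAME" \
#   --query 'Reservations[].Instances[].SecurityGroups[].GroupId' \
#   --output text | unique_ids
```

If all worker nodes share the same security groups, as the commands above assume, `unique_ids` should print a short list that you can pass directly to the RDS setup.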

You can deploy the Amazon RDS database either from the console or with the attached CloudFormation template.

Warning

The CloudFormation template deploys Amazon RDS for MySQL in a configuration intended for Dev/Test environments. We highly recommend deploying a Multi-AZ database for Production. Please review the RDS documentation to learn more.

Remember to select the correct Region in the CloudFormation management console before clicking Next. We recommend that you change the DBPassword; if you do not, it defaults to Kubefl0w. Select the VpcId, Subnets, and SecurityGroupId before clicking Next. Accept the defaults for the remaining settings and click Create Stack.

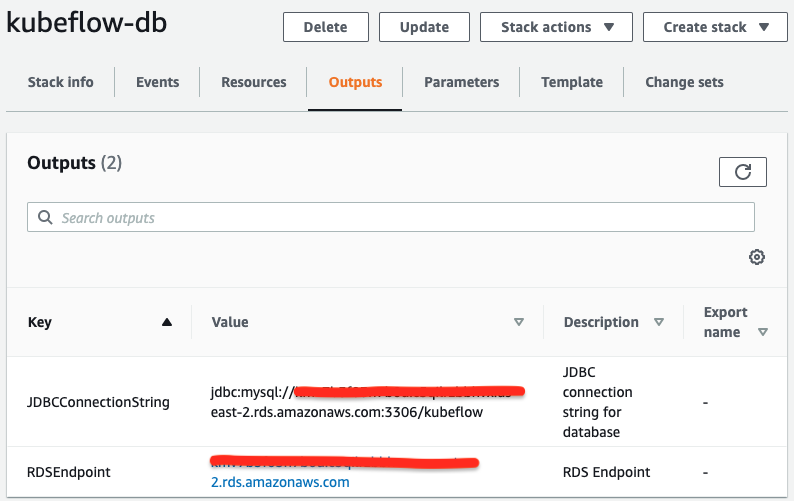

Once the CloudFormation stack is complete, click the Outputs tab to get the RDS endpoint. If you didn’t use CloudFormation, you can find the RDS endpoint in the AWS management console for RDS, on the Connectivity & security tab under the Endpoint & port section. You will use it in the next step when installing Kubeflow.
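
The endpoint can also be read from the command line instead of the console. The following is a minimal sketch, assuming the AWS CLI is configured for the Region that hosts the database. The function names `rds_endpoint` and `stack_outputs`, and the instance and stack names in the usage comments, are placeholders rather than values from this guide.

```shell
#!/usr/bin/env bash

# Print the endpoint address of one RDS instance.
# $1: DB instance identifier (the name shown in the RDS console)
rds_endpoint() {
  aws rds describe-db-instances \
    --db-instance-identifier "$1" \
    --query 'DBInstances[0].Endpoint.Address' \
    --output text
}

# Print the outputs of a CloudFormation stack, one key/value pair per line.
# $1: stack name
stack_outputs() {
  aws cloudformation describe-stacks \
    --stack-name "$1" \
    --query 'Stacks[0].Outputs[].[OutputKey,OutputValue]' \
    --output text
}

# Example usage (needs AWS credentials; both names are placeholders):
# RDS_ENDPOINT=$(rds_endpoint my-kubeflow-db)
# stack_outputs my-rds-stack
```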

Deploy Kubeflow Pipeline and Metadata using Amazon RDS

-

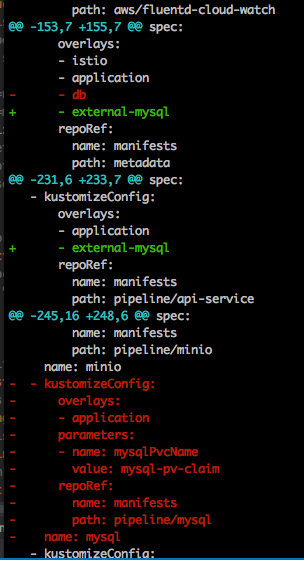

Follow the install documentation until Deploy Kubeflow section. Modify

${CONFIG_FILE}file to addexternal-mysqlin both pipeline and metadata kustomizeConfigs and remove mysql database as shown below.

-

Run the follow commands to build Kubeflow installation:

cd ${KF_DIR} kfctl build -V -f ${CONFIG_FILE}This will create two folders

aws_configandkustomizein your environment. Editparams.envfile for the external-mysql pipeline service (kustomize/api-service/overlays/external-mysql/params.env) and update values based on your configuration:mysqlHost=<$RDSEndpoint> mysqlUser=<$DBUsername> mysqlPassword=<$DBPassword>Edit

params.envfile for the external-mysql metadata service (kustomize/metadata/overlays/external-mysql/params.env) and update values based on your configuration:MYSQL_HOST=external_host MYSQL_DATABASE=<$RDSEndpoint> MYSQL_PORT=3306 MYSQL_ALLOW_EMPTY_PASSWORD=trueEdit

secrets.envfile for the external-mysql metadata service (kustomize/metadata/overlays/external-mysql/secrets.env) and update values based on your configuration:MYSQL_USERNAME=<$DBUsername> MYSQ_PASSWORD=<$DBPassword> -

Run Kubeflow installation:

cd ${KF_DIR} kfctl apply -V -f ${CONFIG_FILE}
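
The params.env and secrets.env edits described above can be scripted rather than made by hand. The following is a minimal sketch, assuming bash and that each file holds one KEY=value pair per line. The helper name `set_env_key` is hypothetical, and the variables in the usage comments stand for values you collected earlier.

```shell
#!/usr/bin/env bash

# Set KEY=value in an env file, replacing any existing assignment of KEY.
# $1: path to the env file  $2: key  $3: value
set_env_key() {
  local file=$1 key=$2 value=$3 tmp
  [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
  tmp=$(mktemp) || return 1
  # Copy every line except an existing assignment of this key ...
  grep -v "^${key}=" "$file" > "$tmp" || true
  # ... then append the new assignment (the key moves to the end of the file)
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  # Write back through the original path so its permissions are preserved
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example usage after `kfctl build` (variable values are placeholders):
# P="${KF_DIR}/kustomize/api-service/overlays/external-mysql/params.env"
# set_env_key "$P" mysqlHost "$RDS_ENDPOINT"
# set_env_key "$P" mysqlUser "$DB_USERNAME"
# set_env_key "$P" mysqlPassword "$DB_PASSWORD"
```

Updating one key at a time leaves any other lines in the generated files untouched, which is safer than overwriting a file whose full contents you have not checked.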

Your pipeline and metadata are now using Amazon RDS. Review the troubleshooting section if you run into any issues.
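
If the services cannot reach the database, one cause worth ruling out first is a `<$...>` placeholder left unreplaced in the edited files. The following is a minimal sketch, assuming bash and grep, of a check for that. The function name `check_placeholders` is hypothetical.

```shell
#!/usr/bin/env bash

# Return 0 when none of the given files contains a "<$Name>" placeholder,
# 1 when at least one placeholder remains (matching lines are printed),
# and 2 when grep itself fails, for example on a missing file.
check_placeholders() {
  local status=0
  grep -n '<\$[A-Za-z_]*>' "$@" || status=$?
  case $status in
    0) echo "unfilled placeholders found" >&2; return 1 ;;
    1) return 0 ;;
    *) return 2 ;;
  esac
}

# Example usage:
# check_placeholders \
#   "${KF_DIR}/kustomize/api-service/overlays/external-mysql/params.env" \
#   "${KF_DIR}/kustomize/metadata/overlays/external-mysql/params.env" \
#   "${KF_DIR}/kustomize/metadata/overlays/external-mysql/secrets.env"
```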
